The Complete Guide to Building GPU Servers

A comprehensive guide to designing, building, and optimizing GPU servers for AI, machine learning, data science and high-performance computing.

A comprehensive guide to designing, building, and optimizing GPU servers for AI, machine learning, data science and high-performance computing.

Building a GPU server isn't like assembling a gaming PC. You're dealing with components that can draw more power than a small house and generate enough heat to warm a room. But get it right, and you'll have a machine that can train AI models, crunch scientific data, or render complex graphics faster than you ever thought possible.

In my quest to build a GPU server myself, I ended up writing this guide covering almost everything from picking the right graphics cards to keeping them cool under heavy loads. We'll walk through hardware selection, power requirements, cooling solutions, and the software setup that makes it all work together.

Why GPU Servers Matter

Graphics Processing Unit (GPU) cards have undergone one of the most dramatic transformations in computing history. What started as specialized chips for rendering 3D graphics has evolved into the backbone of modern artificial intelligence and scientific computing.

In the early 2000s, GPUs were single-purpose devices. They excelled at transforming 3D coordinates into pixels but couldn't do much else. The breakthrough came when researchers realized that the same mathematical operations powering graphics rendering, matrix multiplications and parallel transformations, were exactly what machine learning algorithms needed.

NVIDIA's introduction of CUDA in 2006 marked the turning point. For the first time, developers could harness GPU parallel processing power for general-purpose computing. What followed was an explosion of innovation across multiple fields, from cryptocurrency mining to protein folding research.

The architecture evolution tells the story. Early GPUs had hundreds of simple cores. Today's data center GPUs pack over 10,000 specialized cores, each optimized for different types of mathematical operations. The NVIDIA H100, for example, includes dedicated tensor cores that can perform AI calculations at unprecedented speeds.

The Modern Computing Paradigm Shift

We're witnessing a fundamental shift in how computation works. The traditional model, where powerful CPUs handle sequential processing, is giving way to heterogeneous computing where specialized processors tackle different types of work.

CPUs excel at complex decision-making and sequential tasks. They're like brilliant managers who can handle complicated logic but work on one problem at a time. GPUs are more like massive teams of specialized workers who can tackle thousands of simple tasks simultaneously.

This parallel processing revolution has democratized access to supercomputing power. Tasks that once required million-dollar supercomputers can now run on GPU servers costing tens of thousands. A single modern GPU can deliver more computational power than entire server farms from just a decade ago.

The impact extends beyond raw performance. GPU acceleration has enabled entirely new approaches to problem-solving. Machine learning models that were theoretically possible but computationally impractical suddenly became feasible. This shift has accelerated innovation across every field that deals with large datasets or complex calculations.

Where the GPU Servers’ Power Pays Off

Understanding what GPU servers excel at helps justify the investment and guides your hardware choices. These machines aren't just expensive toys, they're specialized tools that can transform how you approach computationally intensive work.

Artificial Intelligence and Machine Learning

AI training represents the biggest driver of GPU server adoption. Training large language models, computer vision systems, and neural networks requires massive parallel processing power that only GPUs can provide efficiently.

Deep learning frameworks like TensorFlow and PyTorch were built with GPU acceleration in mind. A model that takes weeks to train on CPUs might finish in hours on a well-configured GPU server. The time savings translate directly to faster research cycles and quicker time-to-market for AI products.

Large language models like GPT-4 or Claude require enormous computational resources during training. Even inference (running the trained model) benefits significantly from GPU acceleration, especially for real-time applications like chatbots or voice assistants.

Scientific Computing and Research

Scientific simulations often involve complex mathematical operations that map perfectly to GPU architectures. Weather modeling, climate research, molecular dynamics, and astrophysics simulations can run orders of magnitude faster on GPUs than traditional CPU clusters.

Computational fluid dynamics (CFD) simulations for aerospace and automotive design benefit enormously from GPU acceleration. What once required supercomputer access can now run on a well-designed GPU server in a university lab or engineering department.

Bioinformatics applications like protein folding simulations, genetic sequencing analysis, and drug discovery pipelines leverage GPU parallel processing to handle massive datasets and complex calculations.

Financial Modeling and Risk Analysis

High-frequency trading firms use GPU servers for real-time market analysis and algorithmic trading. The ability to process thousands of market data points simultaneously and execute complex trading strategies in microseconds provides competitive advantages worth millions.

Risk modeling and Monte Carlo simulations for derivatives pricing, portfolio optimization, and regulatory compliance run dramatically faster on GPUs. Banks and hedge funds can perform more sophisticated analysis and respond to market changes more quickly.

Cryptocurrency mining, while controversial, remains a significant GPU server application. Mining operations require massive parallel processing power to solve cryptographic puzzles, making GPUs far more efficient than CPUs for this purpose.

Media and Entertainment

Video rendering and post-production workflows benefit significantly from GPU acceleration. 4K and 8K video editing, 3D animation rendering, and visual effects processing can leverage GPU compute power to reduce rendering times from days to hours.

Game development studios use GPU servers for asset processing, lighting calculations, and automated testing across multiple graphics configurations. The ability to render complex scenes quickly accelerates the development process.

Streaming services use GPU servers for real-time video transcoding, converting content to multiple formats and resolutions simultaneously for different devices and network conditions.

Autonomous Vehicles and Robotics

Self-driving car development requires processing massive amounts of sensor data in real-time. GPU servers handle computer vision tasks like object detection, path planning, and sensor fusion that enable autonomous navigation.

Training autonomous vehicle AI systems requires processing millions of hours of driving data, camera feeds, and sensor readings. GPU servers make this training feasible within reasonable timeframes.

Robotics applications from manufacturing to healthcare use GPU servers for real-time decision making, computer vision, and motion planning. The parallel processing power enables robots to respond to changing environments quickly and safely.

Healthcare and Medical Imaging

Medical imaging applications like MRI reconstruction, CT scan processing, and ultrasound analysis benefit from GPU acceleration. Faster processing means shorter patient wait times and more detailed analysis capabilities.

Drug discovery and pharmaceutical research use GPU servers for molecular modeling, protein interaction analysis, and compound screening. The ability to simulate millions of molecular interactions accelerates the development of new treatments.

Genomics research leverages GPU parallel processing for DNA sequencing analysis, genetic variant identification, and population studies. Processing entire human genomes becomes feasible for routine clinical use.

Cloud Computing and Infrastructure

Cloud service providers use GPU servers to offer AI and machine learning services to customers. AWS, Google Cloud, and Microsoft Azure all provide GPU-accelerated instances for various workloads.

Edge computing deployments use smaller GPU servers to bring AI capabilities closer to data sources. This reduces latency for real-time applications like autonomous vehicles or industrial automation.

Content delivery networks use GPU servers for real-time image and video processing, optimizing content for different devices and network conditions automatically.

Engineering and Design

Computer-aided design (CAD) and computer-aided engineering (CAE) applications benefit from GPU acceleration for complex modeling and simulation tasks. Engineers can iterate designs faster and test more scenarios.

Architectural visualization and building information modeling (BIM) use GPU servers for real-time rendering and virtual reality walkthroughs. Clients can experience designs before construction begins.

Manufacturing simulation and digital twin applications use GPU servers to model entire production lines, optimizing efficiency and predicting maintenance needs.

Cybersecurity and Cryptography

Password cracking and cryptographic analysis for security research benefit from GPU parallel processing. Security professionals can test password strength and identify vulnerabilities more effectively.

Blockchain and cryptocurrency applications beyond mining use GPU servers for smart contract execution, decentralized application hosting, and distributed computing networks.

Network security monitoring and threat detection systems use GPU servers to analyze network traffic patterns and identify anomalies in real-time across large-scale networks.

The Right Use Case for Your Investment

Not every application benefits equally from GPU acceleration. CPU-bound tasks with limited parallelism won't see significant improvements on GPU servers. Sequential processing, simple database operations, and basic web serving typically don't justify GPU investments.

The sweet spot for GPU servers involves applications with high computational requirements that can be parallelized effectively. If your workload involves matrix operations, parallel algorithms, or processing large datasets simultaneously, GPU acceleration likely provides substantial benefits.

Consider the total cost of ownership when evaluating use cases. Applications that run continuously or frequently provide better return on investment than occasional batch jobs that might be better served by cloud GPU instances.

The key is matching your specific workload characteristics to GPU strengths. Parallel processing, floating-point math, and large-scale data processing represent the ideal applications for GPU server investments.

The Democratization of Supercomputing

Perhaps most importantly, GPU servers are democratizing access to supercomputing power. Small research teams, startups, and individual developers can now tackle problems that were once the exclusive domain of large corporations and government labs.

Cloud GPU services have further lowered barriers to entry. A researcher in any part of the world can rent GPU time and access computational resources that rival national laboratories. This democratization is accelerating innovation and enabling discoveries from unexpected sources.

The economic impact is profound. Industries that couldn't justify supercomputing investments can now access GPU power on demand. This accessibility is driving innovation across sectors from agriculture to entertainment.

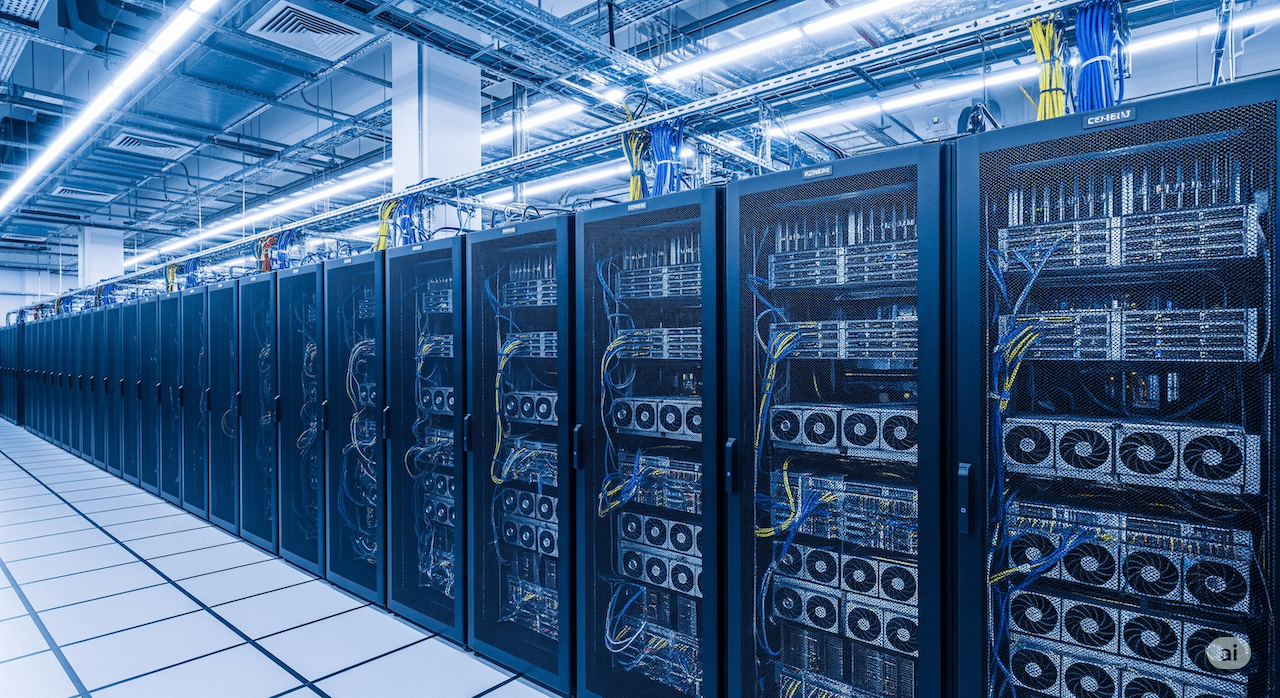

Understanding GPU Server Architecture

A GPU server differs from regular servers in fundamental ways. The graphics cards become the primary compute engines, while the CPU handles coordination and data management. Think of the CPU as a conductor and the GPUs as a very large, very fast orchestra.

Memory architecture becomes critical. Each GPU has its own high-speed memory (VRAM), but moving data between system RAM and GPU memory creates bottlenecks. Smart designs minimize these transfers.

Interconnects matter more than in traditional servers. GPUs need to share data for distributed workloads, so high-bandwidth connections between cards become essential. NVIDIA's NVLink technology provides these fast pathways, allowing GPUs to communicate directly without going through the CPU.

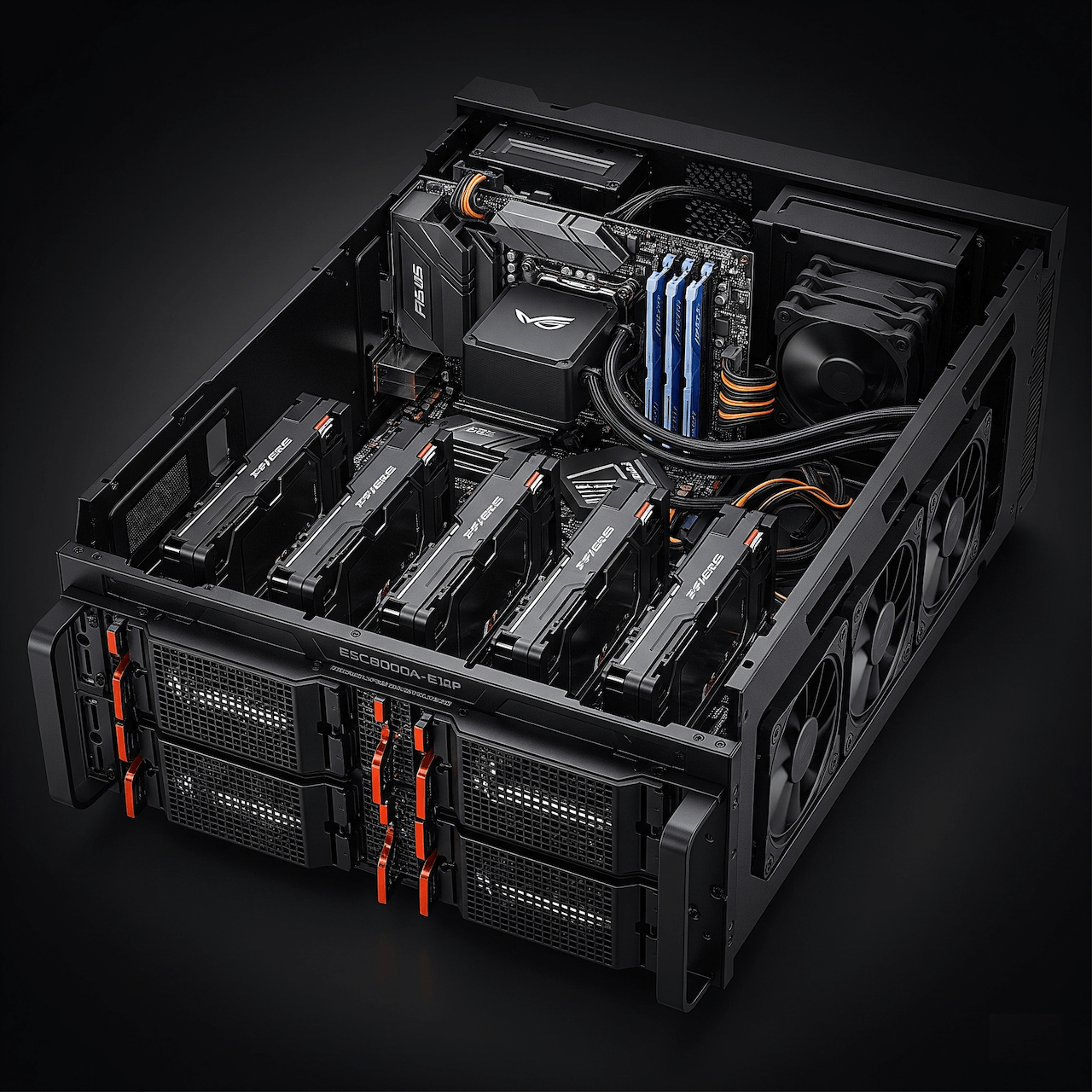

Choosing Your GPUs

Your GPU choice drives everything else about the server design. Different cards have different power requirements, cooling needs, and performance characteristics. The table below breaks down the most popular options to help you make the right choice.

GPU Comparison Guide

Making the Right Choice

For Enterprise AI Training: H100 or H200 provide the best performance but require substantial infrastructure investment. The H100 offers the sweet spot of performance and availability.

For Development and Prototyping: RTX 4090 delivers excellent performance per dollar for smaller teams and individual researchers. Just accept the lack of enterprise features.

For Production Inference: L40S or A100 provide good balance of performance and enterprise features. Consider your specific latency and throughput requirements.

For Mixed Workloads: RTX A6000 offers stability and professional support but at a performance cost. Good for environments that need reliability over raw speed.

For Budget-Conscious Deployments: AMD alternatives can provide good value, but factor in additional software development time and potential compatibility issues.

Key Selection Criteria

Memory Capacity: Large language models and computer vision applications need substantial VRAM. Don't skimp here if you're working with large models.

Power and Cooling: High-end GPUs require serious electrical and cooling infrastructure. Factor these costs into your total budget.

Software Ecosystem: NVIDIA's CUDA platform has the broadest software support. AMD and Intel alternatives are improving but may require additional development effort.

Virtualization Needs: Data center GPUs handle multi-tenancy better than consumer cards. Critical for shared environments or cloud deployments.

Support and Warranty: Enterprise cards come with better support options. Consider this for mission-critical applications where downtime costs money.

The right GPU depends on your specific workload, budget, and infrastructure constraints. Don't automatically choose the most expensive option, but don't penny-pinch on the component that determines your system's performance either.

CPU and System Architecture

The CPU in a GPU server plays a supporting role, but it's still crucial. You need enough CPU cores to feed data to your GPUs and handle system overhead. A good rule of thumb is 2-4 CPU cores per GPU.

High-end server processors like Intel Xeon or AMD EPYC provide the PCIe lanes needed for multiple GPUs. Each modern GPU needs 16 PCIe lanes for full bandwidth. An 8-GPU server requires 128 lanes, which only high-end server platforms can provide.

System memory requirements scale with your workload. Start with at least 16GB of RAM per GPU, but complex workloads might need 32GB or more per card. ECC memory adds cost but prevents data corruption during long training runs.

Power: The Hidden Challenge

Modern GPUs are power-hungry beasts. An NVIDIA H100 can draw 700 watts under full load. An 8-GPU server might need 6-7 kilowatts just for the graphics cards, plus additional power for CPU, memory, and cooling.

Your power supply needs significant headroom above the calculated load. GPU power consumption can spike during certain operations, and you don't want to trip overcurrent protection during important work.

High-efficiency power supplies (80 Plus Titanium or better) reduce waste heat and lower operating costs. The difference between a standard and high-efficiency PSU can save thousands of dollars annually in electricity costs.

Electrical Infrastructure

Most standard office electrical systems can't handle high-end GPU servers. You'll likely need 240V or 480V power distribution, not the standard 120V outlets.

Data centers use power distribution units (PDUs) that can handle the high current loads. Some GPU servers need 30-amp or 50-amp circuits, far beyond what standard electrical systems provide.

Plan for redundancy. Dual power supplies with separate electrical feeds prevent a single power failure from taking down your expensive hardware.

Cooling: Keeping Things Chill

Heat is the enemy of performance and reliability. Modern GPUs can hit 80-90°C under load, and sustained high temperatures reduce lifespan and trigger thermal throttling.

Air Cooling Basics

Traditional air cooling uses fans and heatsinks to move heat away from components. It's simple and cost-effective but has limits in high-density configurations.

GPU servers need substantial airflow. Plan for hot and cold aisles in your data center to prevent hot air recirculation. Cold air enters the front of servers, passes over components, and exits as hot air from the rear.

Fan noise becomes a real issue with multiple high-performance GPUs. What's tolerable for a single card becomes deafening with eight cards running full tilt.

Liquid Cooling Solutions

Liquid cooling handles higher heat loads more efficiently than air cooling. Water has much better thermal capacity than air, allowing smaller, quieter cooling systems.

Direct-to-chip liquid cooling puts cold plates directly on GPU dies, removing heat at the source. This approach works well for high-density installations where air cooling can't keep up.

Closed-loop systems are easier to install and maintain than custom liquid cooling setups. They come pre-filled and sealed, reducing the risk of leaks or maintenance issues.

Immersion Cooling

The most advanced approach submerges entire servers in non-conductive fluid. This method provides uniform cooling across all components and can handle extreme heat densities.

Immersion cooling costs more upfront but can reduce total cooling energy by 50% or more. It also eliminates fan noise and dust problems.

Storage and Networking

GPU servers need fast storage to feed hungry processors. Traditional hard drives can't keep up with modern GPU data requirements. NVMe SSDs provide the bandwidth needed for large datasets and model checkpoints.

Plan storage capacity carefully. AI training generates massive amounts of intermediate data. Model checkpoints, logs, and temporary files can quickly fill terabytes of storage.

Network bandwidth becomes critical for distributed training across multiple servers. Modern GPU clusters use 100 Gigabit Ethernet or InfiniBand for inter-server communication. Even single servers benefit from multiple 10GbE connections for data loading and remote access.

Software Setup and Optimization

Hardware is only half the battle. The software stack determines how well your expensive hardware actually performs.

Operating System Choices

Linux dominates the GPU server space. Ubuntu and CentOS/RHEL are popular choices with good hardware support and extensive documentation. Windows Server works but has fewer optimization options for GPU workloads.

Container platforms like Docker and Kubernetes simplify deployment and scaling. They also provide isolation between different workloads sharing the same hardware.

GPU Drivers and Libraries

NVIDIA's CUDA drivers enable GPU acceleration for most applications. Install the latest stable version unless you have specific compatibility requirements.

Deep learning frameworks like TensorFlow and PyTorch include optimized GPU kernels. Keep these updated for best performance and latest features.

NVIDIA's cuDNN library provides optimized implementations of common neural network operations. It can dramatically improve training performance compared to generic implementations.

Performance Tuning

GPU utilization monitoring helps identify bottlenecks. Tools like nvidia-smi show real-time GPU usage, memory consumption, and temperature data.

Mixed-precision training can double performance on modern GPUs by using 16-bit instead of 32-bit floating-point math. Most frameworks support this automatically with minimal accuracy loss.

Batch size tuning affects both performance and memory usage. Larger batches generally improve GPU utilization but require more memory. Find the sweet spot for your specific workload.

Security Considerations

GPU servers present unique security challenges beyond traditional server concerns. The high value of GPU hardware makes them attractive targets for theft or unauthorized use.

Physical security matters more with expensive GPU hardware. Locked server rooms and access controls prevent unauthorized access to valuable components.

Cryptocurrency mining malware specifically targets GPU resources. Monitor for unexpected GPU usage and implement application whitelisting where possible.

GPU memory can contain sensitive data from previous operations. Some GPUs don't automatically clear memory between tasks, potentially leaking information between different users or applications.

Monitoring and Maintenance

Continuous monitoring prevents small problems from becoming expensive failures. GPU temperatures, power consumption, and utilization patterns reveal developing issues before they cause downtime.

Automated alerting systems can notify administrators of temperature spikes, fan failures, or performance degradation. Early warning prevents thermal damage and extends hardware lifespan.

Regular maintenance includes cleaning dust from heatsinks and fans, checking thermal paste on direct-contact cooling systems, and updating drivers and firmware.

Cost Optimization

GPU servers represent significant investments, often costing hundreds of thousands of dollars. Smart planning maximizes return on investment.

Avoid over-provisioning. An 8-GPU server sitting mostly idle wastes money that could buy additional smaller systems for better utilization.

Consider your actual workload patterns. Burst computing needs might be better served by cloud GPU instances rather than owned hardware.

Electricity costs add up quickly with high-power GPU servers. A single H100-based server can cost $10,000+ annually in electricity at typical commercial rates.

Cooling costs often equal or exceed the direct power consumption of the hardware. Efficient cooling design reduces total operational costs.

Cloud GPU services eliminate upfront capital costs but charge premium rates for compute time. They make sense for variable workloads or when you need access to the latest hardware without buying it.

On-premises hardware provides better economics for steady, predictable workloads. The break-even point typically occurs around 6-12 months of continuous usage.

Future-Proofing Your Investment

GPU technology evolves rapidly. Today's cutting-edge hardware becomes yesterday's news within 2-3 years. Plan for this obsolescence in your purchasing decisions.

Modular designs allow component upgrades without replacing entire systems. Choose motherboards and power supplies that can handle next-generation GPUs.

Software compatibility matters for long-term value. Stick with widely-supported platforms and avoid vendor-specific solutions that might not survive technology transitions.

Common Pitfalls to Avoid

Underestimating power requirements is the most common mistake. Always calculate total system power draw and add 20-30% headroom for safety and efficiency.

Inadequate cooling leads to thermal throttling and reduced performance. Plan cooling capacity for worst-case scenarios, not typical loads.

Ignoring network bandwidth creates bottlenecks that waste GPU performance. Fast storage and networking are just as important as fast GPUs.

Skipping monitoring and alerting systems leads to preventable failures. The cost of monitoring tools is trivial compared to replacing failed hardware or losing work to system crashes.

Building Your First GPU Server

Start with a clear understanding of your workload requirements. Different applications have different needs for GPU memory, compute performance, and interconnect bandwidth.

Choose proven, well-supported hardware combinations. Exotic configurations might save money upfront but cost more in troubleshooting time and compatibility issues.

Plan for growth but don't over-engineer the initial system. It's often better to start smaller and expand based on actual usage patterns rather than guessing at future needs.

Test thoroughly before deploying production workloads. Run stress tests to verify cooling performance, power consumption, and system stability under full load.

The investment in a well-designed GPU server pays dividends in faster results, reduced waiting times, and the ability to tackle problems that were previously impossible. Take the time to get it right, and you'll have a system that serves you well for years to come.

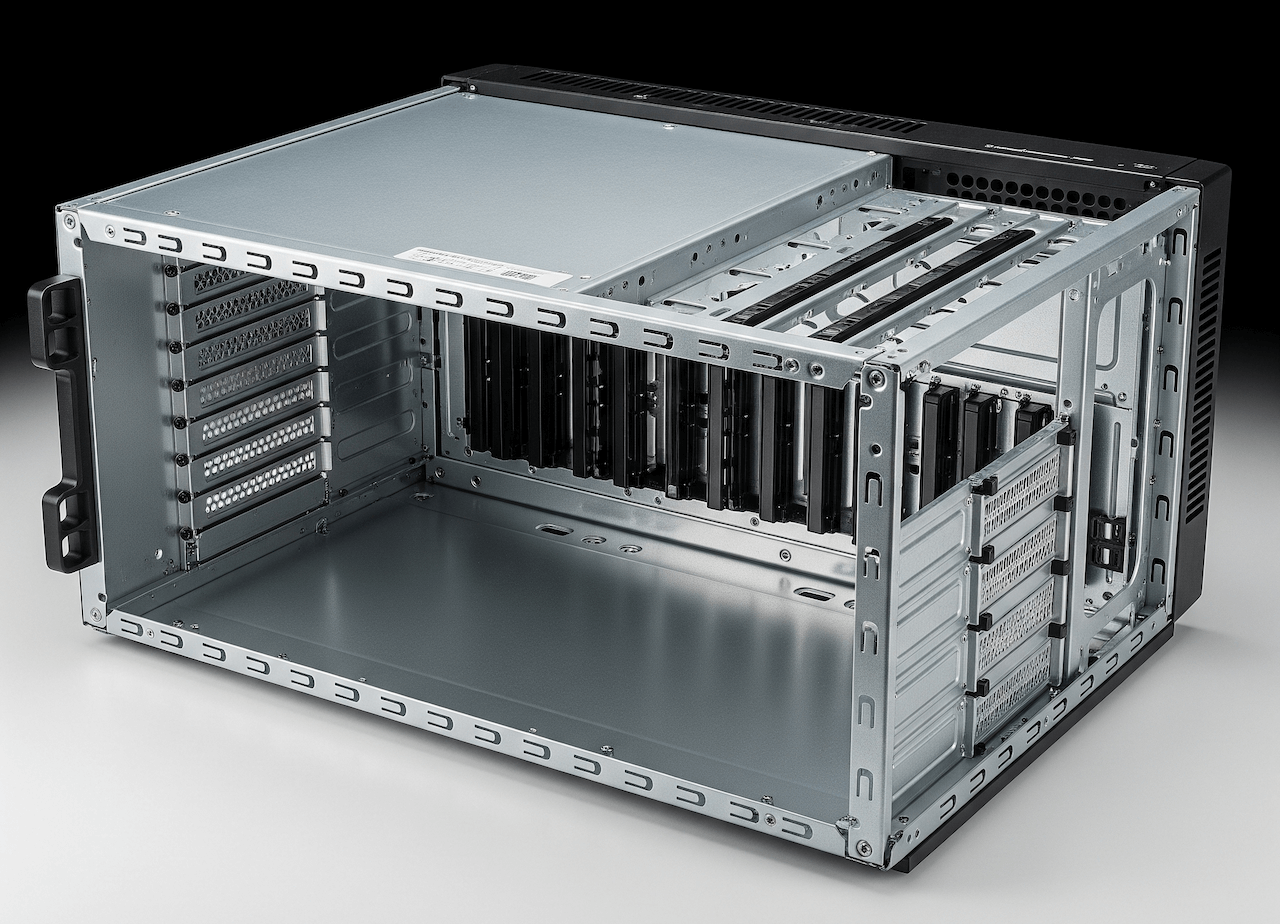

Building GPU Server Step by Step

Building your first GPU server can feel overwhelming, but breaking it down into logical steps makes the process manageable. This guide assumes you're building a mid-range system with 2-4 GPUs, perfect for most AI development and small-scale production workloads.

Quick Reference Checklist

Use this checklist to track your progress and understand what each step involves:

Step 1: Define Your Requirements

Start with honest answers to these questions. Write them down, you'll reference them throughout the build.

What specific workloads will you run? AI training needs different specs than rendering or scientific computing. Be specific about model sizes, dataset requirements, and expected usage patterns.

How many people will use the system? Single-user setups have different requirements than shared research environments. Multi-user systems need more RAM and storage.

What's your realistic budget? Include not just hardware costs but electrical work, cooling upgrades, and ongoing power costs. A $50,000 hardware budget might need another $10,000 for infrastructure.

Step 2: Calculate Power Requirements

This step prevents expensive surprises later. Get out a calculator and be thorough.

List every component's power draw. GPUs are the biggest consumers, but don't forget CPU (150-300W), motherboard (50W), RAM (5W per stick), storage (10W per drive), and fans (5-15W each).

Add 25% headroom to your total. Power supplies run most efficiently at 50-80% load, and you want buffer for power spikes during intensive operations.

Check your electrical service. Most homes have 200-amp service, but older buildings might have less. A 4-GPU server can easily need 30-40 amps of 240V power.

Step 3: Plan Your Space

GPU servers are loud, hot, and need good ventilation. Your spare bedroom probably won't work for anything beyond a single-GPU development system.

Measure your intended location. Server cases are deeper than desktop towers, often 24-30 inches. Allow extra space for cable management and airflow.

Consider noise levels. Multiple high-performance GPUs sound like a jet engine. If you're building in an office space, plan for acoustic isolation or a separate machine room.

Check ventilation requirements. You'll need to move a lot of hot air. A 4-GPU system can dump 2-3kW of heat into a room, requiring substantial air conditioning.

Step 4: Order Components

Don't order everything at once unless you're confident in your design. Start with the case, power supply, and motherboard to verify fit and compatibility.

Order GPUs last if possible. Prices fluctuate rapidly, and you want the latest drivers and firmware. Plus, GPUs are the most likely components to be backordered.

Buy quality cables. Cheap PCIe power cables can cause voltage drops and instability. Get cables rated for your power supply's full output.

Consider spare parts. Extra fans, thermal paste, and cables save time when something fails during testing.

Step 5: Unbox and Inspect Everything

Don't rush this step. Damaged components cause headaches later, and return windows are limited.

Check each component against your order list. Verify model numbers, especially for RAM and storage where specifications matter.

Inspect for physical damage. Look for bent pins on CPUs, cracked PCBs, and damaged connectors. Take photos of any issues for warranty claims.

Test power supplies before installation. Most quality PSUs include a paperclip test procedure. Better to find a dead PSU on your workbench than after everything's installed.

Step 6: Verify Compatibility

Double-check that everything actually works together. Compatibility issues are easier to resolve before assembly.

Confirm your motherboard supports your CPU. Check the manufacturer's CPU compatibility list, not just the socket type. BIOS updates might be required for newer processors.

Verify RAM compatibility. Check your motherboard's qualified vendor list (QVL) for your specific RAM kit. ECC memory requires motherboard support.

Ensure adequate PCIe slots and lanes. Count carefully, modern GPUs need x16 slots and full bandwidth for optimal performance.

Check clearances. Measure GPU length against case specifications. Some cards are over 12 inches long and won't fit in compact cases.

Step 7: Prepare Your Workspace

Set up a clean, well-lit area with plenty of space. You'll be handling expensive components, so take your time.

Use an anti-static wrist strap or touch a grounded metal object frequently. Static electricity can damage sensitive components.

Organize your tools. You'll need head screwdrivers (magnetic tips help), zip ties for cable management, and thermal paste if not included with your CPU cooler.

Have your motherboard manual handy. You'll reference it constantly for connector locations and jumper settings.

Step 8: Install the Power Supply

Start with the PSU since it's heavy and awkward to install later.

Orient the fan correctly. In most cases, the fan should face down to draw cool air from outside the case. Some cases require upward orientation.

Use all four mounting screws. Power supplies are heavy, and vibration can loosen inadequate mounting.

Don't connect any cables yet. Wait until other components are installed to avoid cable management nightmares.

Step 9: Prepare the Motherboard

Install the CPU, RAM, and M.2 storage on the motherboard before mounting it in the case. It's much easier to work on a flat surface.

Install the CPU carefully. AMD and Intel have different mechanisms, but both require gentle handling. The CPU should drop into place without force.

Apply thermal paste if your cooler doesn't include pre-applied paste. Use a rice grain-sized amount in the center of the CPU. The mounting pressure will spread it evenly.

Install RAM in the correct slots. Most motherboards want you to use slots 2 and 4 first for dual-channel operation. Check your manual.

Install M.2 drives now if you're using them. The mounting screws are tiny and easy to lose once the motherboard is in the case.

Step 10: Mount the Motherboard

Install I/O shield first. It only goes in one way, but it's easy to forget until the motherboard is already mounted.

Use standoffs in the correct positions. Extra standoffs can short the motherboard, while missing standoffs can cause flexing and damage.

Don't overtighten screws. Snug is sufficient, overtightening can crack the PCB or strip threads.

Connect the 24-pin power connector and CPU power connector before installing GPUs. These cables are stiff and hard to route around large graphics cards.

Step 11: Install Storage and Cooling

Install any 2.5" or 3.5" drives in their bays. Use all mounting screws to prevent vibration noise.

Install case fans if not pre-installed. Plan your airflow pattern: cool air in the front, hot air out the back and top.

Connect fan headers to the motherboard. Most motherboards have multiple fan headers with different control options.

Test your cooling setup before installing GPUs. Run the system and check temperatures under light load.

Step 12: Install Your First GPU

Start with one GPU to verify basic functionality before installing multiple cards.

Remove the appropriate slot covers from the case. You'll typically need two slots per GPU.

Seat the GPU firmly in the top PCIe x16 slot. You should hear a click when the retention clip engages.

Secure the GPU with screws to the case bracket. Don't rely only on the PCIe slot for mechanical support.

Connect PCIe power cables. Modern GPUs need one or two 8-pin connectors. Use separate cables from the PSU, not daisy-chained connectors.

Step 13: First Boot

Connect a monitor to the GPU, not the motherboard's video output. The motherboard video might be disabled when a GPU is installed.

Connect keyboard, mouse, and network cable. You'll need these for initial setup.

Double-check all power connections. Loose connections cause mysterious boot failures.

Press the power button and cross your fingers. If nothing happens, check the front panel connectors. These tiny connectors are easy to get wrong.

Step 14: BIOS Setup

Enter BIOS setup during boot (usually Delete or F2 key). Modern UEFI interfaces are much friendlier than old text-based BIOS.

Enable XMP/DOCP for your RAM. This ensures your memory runs at rated speeds instead of conservative defaults.

Check CPU and GPU temperatures. They should be reasonable at idle (30-50°C for CPU, 30-40°C for GPU).

Verify all components are detected. Check that your RAM capacity, storage drives, and GPU are all recognized.

Set boot priority to your installation media. You'll need this for operating system installation.

Step 15: Stress Testing

Run initial stress tests before installing additional GPUs. It's easier to troubleshoot with fewer variables.

Use Prime95 or similar to stress the CPU. Monitor temperatures and ensure the system remains stable.

Run FurMark or similar GPU stress test. Watch temperatures and listen for unusual fan noise.

Test memory with MemTest86. Let it run for several passes to catch intermittent errors.

Monitor power consumption with a kill-a-watt meter. Verify your calculations were accurate.

Step 16: Install Additional GPUs

Power down completely and unplug the system. Adding GPUs to a powered system can damage components.

Install GPUs in the remaining PCIe x16 slots. Space them out if possible to improve cooling.

Connect power cables to each GPU. High-end cards need substantial power, don't skimp on cable quality.

Verify adequate clearance between cards. Some configurations leave minimal space for airflow.

Step 17: Configure Multi-GPU Setup

Boot and verify all GPUs are detected. Check Device Manager on Windows or lspci on Linux.

Install the latest GPU drivers. Download directly from NVIDIA or AMD, not from Windows Update.

Configure SLI/CrossFire if needed for gaming workloads. Most AI frameworks handle multiple GPUs automatically.

Test each GPU individually. Run stress tests on one card at a time to isolate any issues.

Step 18: Thermal Testing

Run all GPUs under full load simultaneously. This is the real test of your cooling design.

Monitor temperatures carefully. GPUs should stay below 80-85°C under sustained load.

Check for thermal throttling. Performance should remain consistent during extended testing.

Adjust fan curves if necessary. More aggressive cooling might be needed for multi-GPU configurations.

Step 19: Operating System Installation

Choose your OS based on your workload. Linux offers better performance for most AI applications, Windows provides easier management for mixed workloads.

Create installation media on another computer. Download the latest version for best hardware support.

Install to your fastest storage device. NVMe SSDs provide the best boot and application loading times.

Configure RAID if using multiple drives. RAID 0 for performance, RAID 1 for redundancy, or RAID 10 for both.

Step 20: Driver Installation

Install motherboard chipset drivers first. These provide basic system functionality and USB support.

Install GPU drivers next. Use the latest version unless you have specific compatibility requirements.

Install monitoring software. GPU-Z, HWiNFO64, or similar tools help track temperatures and performance.

Configure automatic driver updates carefully. Automatic updates can break working configurations.

Step 21: Framework Installation

Install CUDA toolkit for NVIDIA GPUs. This provides the foundation for most AI frameworks.

Install Python and your preferred AI frameworks. Anaconda provides a good starting point with pre-configured environments.

Install Docker if you plan to use containers. GPU support requires additional configuration but provides excellent isolation.

Test GPU acceleration with simple examples. Verify that frameworks can actually use your GPUs before diving into complex projects.

Step 22: Performance Tuning

Benchmark your system with realistic workloads. Synthetic benchmarks don't always reflect real-world performance.

Tune memory timings if you're comfortable with advanced settings. Tighter timings can improve performance but require stability testing.

Optimize power settings. High-performance power plans prevent CPU throttling during intensive workloads.

Configure GPU boost settings. Modern GPUs automatically overclock themselves, but you can adjust the limits.

Step 23: Monitoring Setup

Install comprehensive monitoring software. You want to track temperatures, power consumption, and utilization over time.

Set up alerting for critical thresholds. High temperatures or fan failures need immediate attention.

Create performance baselines. Document normal operating parameters for future troubleshooting.

Schedule regular health checks. Automated testing can catch developing problems before they cause failures.

Step 24: Final Validation

Run extended stress tests on the complete system. 24-48 hours of continuous operation reveals stability issues.

Test power failure recovery. Verify the system boots properly after unexpected shutdowns.

Document your configuration. Save BIOS settings, driver versions, and software configurations for future reference.

Create a maintenance schedule. Regular cleaning and monitoring prevent small problems from becoming expensive failures.

Common Gotchas and How to Avoid Them

Power Supply Sizing: Always oversize your PSU. A 1000W system needs at least a 1200W power supply for efficiency and headroom.

Cooling Airflow: Plan your airflow pattern before installing components. Hot air from one GPU shouldn't blow directly into another.

Cable Management: Route power cables away from fans and heat sources. Melted cables cause fires and system failures.

Driver Conflicts: Uninstall old drivers completely before installing new ones. Use DDU (Display Driver Uninstaller) for clean installations.

Thermal Paste Application: Less is more. Too much thermal paste actually reduces cooling performance.

ESD Protection: Static electricity kills components silently. Use proper grounding techniques throughout the build.

BIOS Updates: Update BIOS only if necessary. Failed BIOS updates can brick your motherboard.

Component Compatibility: Double-check everything. Incompatible components waste time and money.

When to Call for Help

Don't be afraid to ask for assistance. GPU servers represent significant investments, and professional help costs less than replacing damaged components.

Electrical Work: Hire a licensed electrician for 240V circuits and high-amperage installations. Electrical fires aren't worth the savings.

Cooling Design: Consult HVAC professionals for room cooling requirements. Inadequate cooling reduces performance and shortens component life.

Professional Assembly: Consider professional assembly for your first build. Watching an expert work teaches valuable techniques.

Warranty Service: Use manufacturer warranty service for component failures. DIY repairs often void warranties.

Building a GPU server is challenging but rewarding. Even though we have tried to cover every possible apect, you must carefully read the instruction manual and guides supplied by the component suppliers. Take your time, double-check everything, and don't rush the process. A well-built system will serve you reliably for years and provide the computational power to tackle problems that were previously impossible.

Congratulations! You've successfully assembled the most futuristic and powerful GPU server.